The woes of coding by assumption

On one project we have a scheduler-type service that polls an Azure Queue for work and carries it out, e.g. sending some emails. Ever since I joined the project I had been puzzled by our number of storage transactions: it was at least 100 times higher than my back-of-the-envelope calculation said it should be.

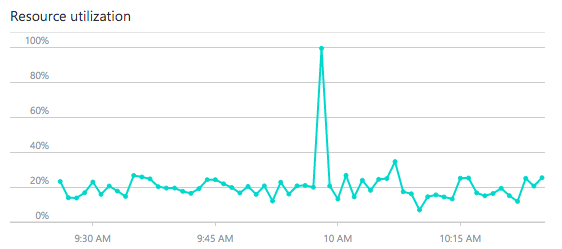

I installed Application Insights to try it out and, after I published, it informed me that one of the apps (the scheduler) was sending 250+ requests per second to Azure Queues. What the hell, right?

I took a look at the code and it was the sort of thing I was expecting:

```csharp
void Start()
{
    // One-shot timer: fires once after _queuePollingInterval ms, no periodic repeat.
    _timer = new Timer(PollQueue, null, _queuePollingInterval, Timeout.Infinite);
}

void PollQueue(object state)
{
    // check the queue for new work and do it.

    // Re-arm the one-shot timer for the next poll.
    if (_timer != null) _timer.Change(_queuePollingInterval, Timeout.Infinite);
}
```

All looks good, right? The entry point calls Start(), which in turn sets up a timer to call PollQueue(null) after the specified _queuePollingInterval, which was defined as:

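```csharp
int _queuePollingInterval = TimeSpan.FromSeconds(1).Milliseconds;
```

Spot the mistake now? _queuePollingInterval == 0. The Milliseconds property returns only the milliseconds component of the TimeSpan (a value from -999 to 999), and for exactly one second that component is 0. So it was looping as fast as possible, which seems to have been about 250 times a second! We should have been using the TotalMilliseconds property.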
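To make the difference between the two properties concrete, here is a minimal sketch. It is not code from the project, and the class name `MillisecondsDemo` and the 1.5-second value are made up for illustration.

```csharp
using System;

// Hypothetical demo class, not part of the scheduler service.
class MillisecondsDemo
{
    static void Main()
    {
        TimeSpan oneSecond = TimeSpan.FromSeconds(1);
        TimeSpan oneAndAHalf = TimeSpan.FromSeconds(1.5);

        // Milliseconds is only the millisecond *component* of the value.
        Console.WriteLine(oneSecond.Milliseconds);        // 0
        Console.WriteLine(oneAndAHalf.Milliseconds);      // 500

        // TotalMilliseconds is the whole duration expressed in milliseconds.
        Console.WriteLine(oneSecond.TotalMilliseconds);   // 1000
        Console.WriteLine(oneAndAHalf.TotalMilliseconds); // 1500
    }
}
```

The same split applies to the other component/total pairs on TimeSpan, such as Seconds and TotalSeconds.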

With the fix, the definition becomes:

```csharp
int _queuePollingInterval = Convert.ToInt32(TimeSpan.FromSeconds(1).TotalMilliseconds);
```

This sort of thing should have been caught at the time of writing, but someone assumed they knew what the Milliseconds property would return and didn't bother checking. That simple change knocked 15% CPU utilization off the service and cut our storage transactions by about 99.6%, which is about what you'd expect when going from roughly 250 polls a second to one.
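One way to avoid this class of mistake altogether is to never convert to an int at all. System.Threading.Timer has constructor and Change overloads that take TimeSpan values, and Timeout.InfiniteTimeSpan plays the role of Timeout.Infinite. The following is only a sketch of that alternative, not what we shipped; `QueuePoller`, `PollCount` and `Program` are hypothetical names, and the actual queue work is left as a comment.

```csharp
using System;
using System.Threading;

// Hypothetical rewrite of the poller that keeps the interval as a TimeSpan.
class QueuePoller : IDisposable
{
    private readonly TimeSpan _queuePollingInterval = TimeSpan.FromSeconds(1);
    private Timer _timer;
    private int _pollCount;

    // Number of polls so far (added here only so the sketch is observable).
    public int PollCount => _pollCount;

    public void Start()
    {
        // One-shot timer; Timeout.InfiniteTimeSpan disables the periodic repeat.
        _timer = new Timer(PollQueue, null, _queuePollingInterval, Timeout.InfiniteTimeSpan);
    }

    private void PollQueue(object state)
    {
        // check the queue for new work and do it.
        Interlocked.Increment(ref _pollCount);

        try
        {
            // Re-arm the one-shot timer for the next poll.
            _timer?.Change(_queuePollingInterval, Timeout.InfiniteTimeSpan);
        }
        catch (ObjectDisposedException)
        {
            // The poller was disposed while this callback was running.
        }
    }

    public void Dispose() => _timer?.Dispose();
}

class Program
{
    static void Main()
    {
        using (var poller = new QueuePoller())
        {
            poller.Start();
            Thread.Sleep(3500); // long enough for a few one-second polls
            Console.WriteLine("Polls so far: " + poller.PollCount);
        }
    }
}
```

Because the interval never leaves TimeSpan, there is no Milliseconds versus TotalMilliseconds choice left to get wrong.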
