5 Lessons from failure

Today I messed up.

I'm a strong believer in sharing experiences of failure. If no one talks about their mistakes, we're doomed to repeat the same errors over and over again. So, here goes...

Today I made a big mistake in a production application that resulted in 15 minutes of frantic database scripting and a partial service interruption. I'm going to share what I did wrong, partly so that I can focus on what to do better next time, but also because I don't want other people to make the same mistakes. Here's what happened.

In our application, some entities use a natural key rather than a database-generated primary key. This can make querying easier, because you need fewer joins while still keeping referential integrity (at the cost of a bit of denormalisation). In the past the whole DAL (data access layer) was hand-coded SQL, so this saved a considerable amount of developer time; we now use an ORM, so 90% of the time we don't care about the SQL. I wrote a script that migrated one such entity from a string primary key to an int (identity) primary key and moved all of its data over. The script had to be run on the SQL Azure instance just after VIP swapping the deployments, so that the right code was running against the right database schema. I did a few practice runs locally and everything looked fine: the script ran quickly (about 15 seconds), and there would be minimal breakage if the old code hit the new schema or vice versa. All ready to go!
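The post doesn't include the migration script itself, so here is a minimal sketch of the same kind of change. It assumes Python's built-in `sqlite3` module as a stand-in for SQL Azure, with SQLite's `INTEGER PRIMARY KEY AUTOINCREMENT` playing the role of an identity column. The `country` and `customer` tables and both helper functions are hypothetical: `country` starts with a string natural key, and `customer` references it by that string.

```python
import sqlite3

def create_demo_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical 'before' schema: customer points at country by its string code."""
    conn.executescript("""
        CREATE TABLE country (code TEXT PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE customer (
            id           INTEGER PRIMARY KEY,
            name         TEXT NOT NULL,
            country_code TEXT NOT NULL REFERENCES country(code)
        );
        INSERT INTO country VALUES ('GB', 'United Kingdom'), ('NZ', 'New Zealand');
        INSERT INTO customer VALUES (1, 'Alice', 'NZ'), (2, 'Bob', 'GB');
    """)

def migrate_to_surrogate_key(conn: sqlite3.Connection) -> None:
    """Switch country from a TEXT natural key to an INTEGER surrogate key."""
    conn.executescript("""
        -- New parent table: integer key, old natural key kept as a UNIQUE column
        CREATE TABLE country_new (
            id   INTEGER PRIMARY KEY AUTOINCREMENT,
            code TEXT NOT NULL UNIQUE,
            name TEXT NOT NULL
        );
        INSERT INTO country_new (code, name) SELECT code, name FROM country;

        -- New child table references the integer key instead of the string
        CREATE TABLE customer_new (
            id         INTEGER PRIMARY KEY,
            name       TEXT NOT NULL,
            country_id INTEGER NOT NULL REFERENCES country_new(id)
        );
        INSERT INTO customer_new (id, name, country_id)
            SELECT c.id, c.name, n.id
            FROM customer AS c
            JOIN country_new AS n ON n.code = c.country_code;

        -- Swap the new tables in under the old names
        DROP TABLE customer;
        DROP TABLE country;
        ALTER TABLE country_new RENAME TO country;
        ALTER TABLE customer_new RENAME TO customer;
    """)
```

The sketch builds new tables, copies the data and renames them rather than altering the key in place, because SQLite's `ALTER TABLE` can't change an existing table's primary key. Note that it makes no attempt to be atomic; if a statement fails halfway, whatever already ran stays applied.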

I waited until 2am in the country the site serves (working in a different time zone is awesome sauce), deployed the code and started the VIP swap on the Azure cloud service. I ran script 1: all fine. I ran script 2: red, red, red. The migration script had done part of the work and then thrown an error, because what I was trying to do wasn't supported in my version of SQL.

A few (15!) tense minutes later I had unpicked all of my borked changes, rolled back the code, and learnt a few lessons.

1. Backups are meaningless. Restores matter

Like a 'good' developer, I backed up my database before starting. However, once I had messed up the database, I realised that I had no plan for actually restoring it. In this case I quickly saw what was missing and managed to fix it within a couple of minutes.
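Rehearsing the restore is the step I skipped. As a minimal sketch, assuming SQLite and Python's `sqlite3.Connection.backup` as a stand-in for whatever backup mechanism your database offers, the hypothetical `rehearse_restore` helper below takes a backup, restores it into a scratch database and checks that the restored copy matches the live one, all before any risky change is made.

```python
import sqlite3

def backup(src: sqlite3.Connection, path: str) -> None:
    """Copy the whole live database to a file using SQLite's online backup API."""
    dest = sqlite3.connect(path)
    try:
        src.backup(dest)
    finally:
        dest.close()

def restore(path: str, target: sqlite3.Connection) -> None:
    """Overwrite the database behind `target` with the backup file at `path`."""
    src = sqlite3.connect(path)
    try:
        src.backup(target)
    finally:
        src.close()

def rehearse_restore(live: sqlite3.Connection, path: str) -> bool:
    """Back up `live`, restore it into a scratch DB and compare full SQL dumps."""
    backup(live, path)
    scratch = sqlite3.connect(":memory:")
    try:
        restore(path, scratch)
        # iterdump() emits schema and rows as SQL text, so equal dumps mean equal data
        return list(live.iterdump()) == list(scratch.iterdump())
    finally:
        scratch.close()
```

If `rehearse_restore` returns `False` (or raises), you've found out that the backup is useless while it is still cheap to find out, rather than at 2am with the site half broken.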

2. Test on the same environment as production

This should really be obvious: I should have tested my script on a SQL Azure instance. However, development is faster against a local database instance with the app running outside the Azure emulator. The error in my migration script was caused by SQL Azure not allowing a clustered index to be dropped. I knew that because I've come up against it before, but because I was happily scripting against a full-fat SQL Server 2012 instance, I forgot.

The script(s) I wrote assumed that they would always run to completion. Because they had worked during my testing, I didn't even consider that they might fail. Wrapping them in a transaction would have saved me a lot of anxiety.
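Here is a minimal sketch of the all-or-nothing behaviour a transaction gives you, again assuming SQLite via Python's `sqlite3` rather than SQL Azure. SQLite rolls back schema changes (DDL) just like data changes, but engines differ on this, so check yours. The connection is opened with `isolation_level=None` so that the explicit `BEGIN`/`COMMIT`/`ROLLBACK` in the hypothetical `run_migration` helper are the only transaction boundaries. In a quick trial, a statement such as `DROP INDEX` on an index that doesn't exist can stand in for the operation SQL Azure rejected.

```python
import sqlite3

def run_migration(conn: sqlite3.Connection, statements: list[str]) -> None:
    """Apply every statement, or none of them if any statement fails."""
    # With isolation_level=None, Python issues no implicit BEGIN/COMMIT of its own
    if conn.isolation_level is not None:
        raise ValueError("open the connection with isolation_level=None")
    conn.execute("BEGIN")
    try:
        for sql in statements:
            conn.execute(sql)
    except Exception:
        # Undo the statements that already ran, then surface the original error
        conn.execute("ROLLBACK")
        raise
    conn.execute("COMMIT")
```

With this shape, a failure halfway through leaves the database exactly as it was before the script started, instead of in a half-migrated state that has to be unpicked by hand.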

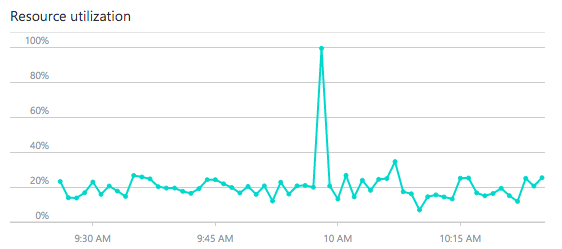

I can't tell you how many times ELMAH or Application Insights have helped me track down an odd bug. Some things you can't test for, e.g. users doing unexpected things or crawlers sending odd requests. A saved stack trace with the exact URL being hit is invaluable.
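ELMAH and Application Insights do this for ASP.NET apps. As a rough illustration of the idea only (not how either tool works internally), here is a minimal sketch of a Python WSGI middleware, assuming the standard `logging` and `wsgiref.util` modules, that records the full request URL alongside the stack trace of any unhandled exception. `ErrorLoggingMiddleware` and the logger name `unhandled` are hypothetical.

```python
import logging
import sys
from wsgiref.util import request_uri

log = logging.getLogger("unhandled")

class ErrorLoggingMiddleware:
    """Wrap a WSGI app and log any unhandled exception with its URL and stack trace."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        try:
            return self.app(environ, start_response)
        except Exception:
            # request_uri rebuilds scheme://host/path?query from the WSGI environ
            url = request_uri(environ, include_query=True)
            method = environ.get("REQUEST_METHOD", "GET")
            # log.exception logs at ERROR level and appends the current traceback
            log.exception("Unhandled exception for %s %s", method, url)
            start_response(
                "500 Internal Server Error",
                [("Content-Type", "text/plain")],
                sys.exc_info(),
            )
            return [b"Internal Server Error"]
```

This only catches exceptions raised when the wrapped app is called; errors raised later, while a streamed response body is being iterated, would need extra handling.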

I'm thankful that when I told the boss that I'd just hobbled the production app, his reply was: "Don't worry, everyone messes up. I once deleted several million rows of survey data. How are you going to fix it?".

Let's celebrate and learn from our mistakes.
